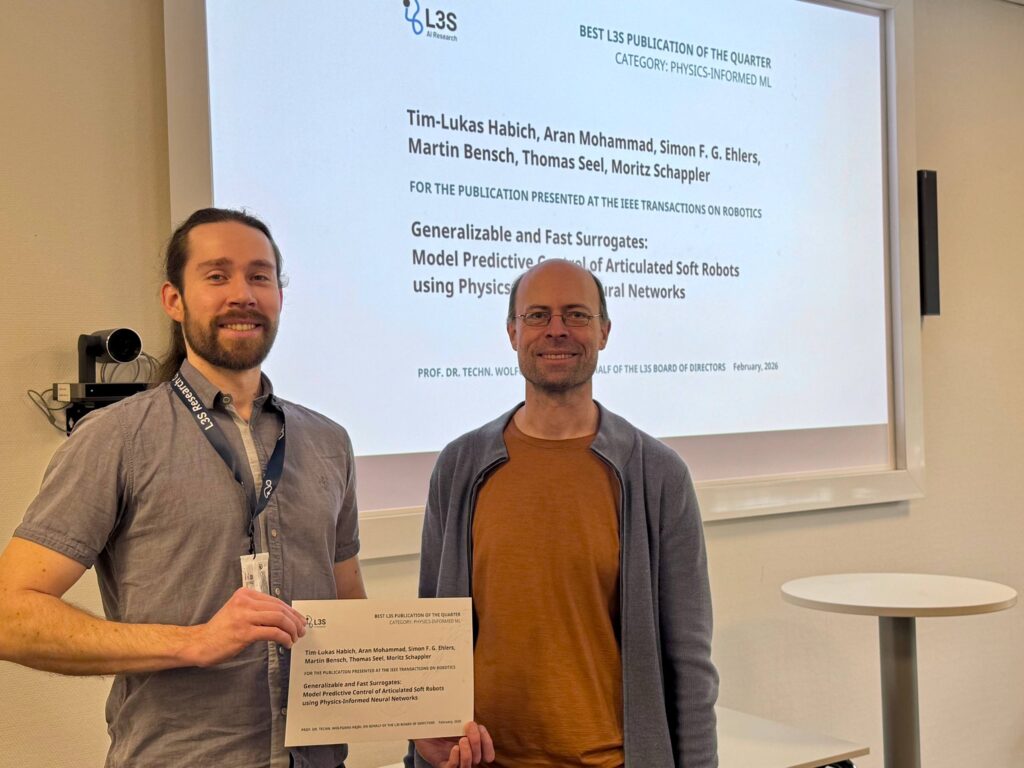

L3S Best Publication of the Quarter (Q3+Q4/2025)

Category: Physics-informed ML

Generalizable and Fast Surrogates: Model Predictive Control of Articulated Soft Robots using Physics-Informed Neural Networks

Authors: Tim-Lukas Habich; Aran Mohammad; Simon F. G. Ehlers; Martin Bensch; Thomas Seel; Moritz Schappler

Published in IEEE Transactions on Robotics

The paper in a nutshell:

The work addresses the limited generalizability of learned (black-box) models to unknown system domains by incorporating physical knowledge. Although the research focuses on soft robotics, the proposed physics-informed neural network is applicable to a wide range of technical areas.

What is the potential impact of your findings?

Physics-informed neural networks (PINNs) are promising in many technical areas, especially when physical model knowledge (e.g., differential equations) is available but the resulting system models are too slow for the application. They are also useful when the physical model is inaccurate and can be improved with a small amount of real-world data. The proposed PINN can serve as a fast, accurate, and generalizable surrogate model of dynamical systems, enabling computationally intensive applications such as model predictive control or fast simulation.
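
To make the idea of combining a differential equation with a small amount of data more concrete, the following is a minimal sketch of a physics-informed loss. It is not the method from the paper: the toy ODE dx/dt + k*x = 0, the tiny tanh network, and all names (`surrogate`, `pinn_loss`, `physics_weight`, `collocation_times`) are assumptions chosen purely for illustration, and no training loop is shown.

```python
import math

def surrogate(t, params):
    """Hypothetical one-hidden-layer tanh network x_hat(t).

    Returns the prediction and its analytic time derivative.
    """
    w1, b1, w2, b2 = params
    x, dx = b2, 0.0
    for a, b, c in zip(w1, b1, w2):
        h = math.tanh(a * t + b)
        x += c * h
        dx += c * a * (1.0 - h * h)  # d/dt of c * tanh(a*t + b)
    return x, dx

def pinn_loss(params, data, collocation_times, k=1.0, physics_weight=1.0):
    """Data misfit plus ODE residual for the assumed toy model dx/dt + k*x = 0."""
    # Data term: mean squared error on the (few) measured samples.
    data_loss = sum((surrogate(t, params)[0] - x) ** 2 for t, x in data) / len(data)
    # Physics term: mean squared ODE residual at points that need no measurements.
    residuals = []
    for t in collocation_times:
        x, dx = surrogate(t, params)
        residuals.append(dx + k * x)
    physics_loss = sum(r * r for r in residuals) / len(residuals)
    return data_loss + physics_weight * physics_loss

# Arbitrary illustrative weights and two "measurements" of x(t) = exp(-t).
params = ([0.5, -1.0], [0.0, 0.3], [1.0, -0.5], 0.2)
data = [(0.0, 1.0), (1.0, math.exp(-1.0))]
collocation_times = [0.1 * i for i in range(21)]

loss = pinn_loss(params, data, collocation_times)
print(loss)
```

In such a setup, the physics term can be evaluated at arbitrary time points without measurements, which is one way a surrogate might be encouraged to behave plausibly outside the region covered by data. Once trained, evaluating the network is a handful of arithmetic operations, which is what makes this kind of surrogate attractive for repeated evaluation inside a controller.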

Link to the full paper: https://ieeexplore.ieee.org/document/11242009