MediaFutures – Data-driven Innovation Hub for the Media Value Chain

Combating Disinformation with Art and Data

What ideas do start-ups and artists have for tackling disinformation with the vast amount of data available, ideally with the involvement of citizens, especially those from groups with little social influence? Over the past three years, MediaFutures, the European Data-driven Innovation Hub for the Media Value Chain, has supported 51 start-ups and small and medium-sized enterprises and 43 artists with a total of 2.5 million euros to implement their ideas across three open calls. The selected applicants took part in a six-month support programme that included funding, mentoring and further training, for example on ethical and legal issues. The results are digital products, services and artworks that use data in innovative and inclusive ways to strengthen citizens’ media literacy and counteract disinformation. All MediaFutures projects can be found on the hub’s website. Binaire presents three award-winning projects as examples: Soft Evidence, Edit Wars and The Oracle Network.

MediaFutures was part of the S+T+ARTS ecosystem, an initiative of the European Commission to promote alliances between science, technology and the arts.

BEST START-UP and BEST ARTIST in the START-UP MEETS ARTIST track (Second cohort of the competition)

The Oracle Network

The Oracle Network deals with misinformation and disinformation in a stimulating way. For example, the team has placed augmented reality (AR) art around the Romanian city of Cluj-Napoca at locations that represent certain minorities. The AR pieces illustrate disinformation about these groups. To reach the next AR point, users have to solve a puzzle. The trail leads participants to the central exhibition, a room with interactive artificial intelligence art installations that provide knowledge about disinformation. The urban art is aimed at a broad audience.

The team behind the Oracle Network wants to combat misinformation and disinformation on the internet with creativity. The goal is an innovative system that reduces the impact of fake news on public opinion and involves artists, programmers, journalists and the general public.

The Oracle Network has also developed an augmented reality app to better reach people susceptible to fake news. Users hunt fake news in the form of mind bugs. Step by step and with examples, they learn what disinformation is and how it works.

Artists have designed the mind bugs representing fake news for the Oracle Network’s AR app.

BEST ARTISTS in the ARTISTS FOR MEDIA track (Second cohort of the competition)

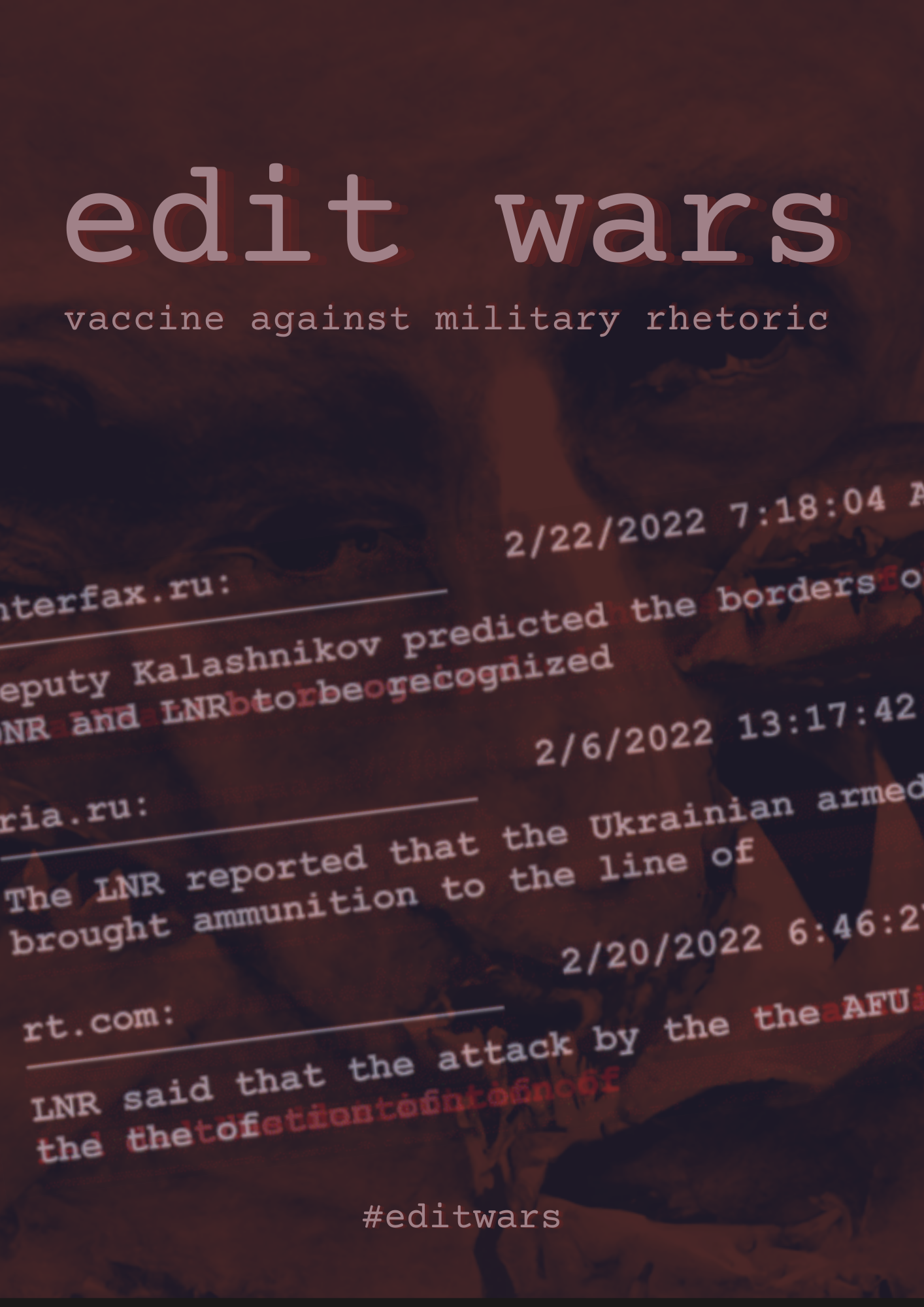

Edit Wars

Edit Wars is an interactive art project about the manipulation of mass opinion in Russia. The project deals with aggressive narratives in the government-controlled media that disconnect public perception from reality. Edit Wars combines research with art: the team uses quantitative and qualitative data analysis methods to produce meaningful results for the artistic presentation. In the creative part of the project, these results are translated into an interactive multimedia format.

The influence of information today can hardly be overestimated: as a weapon, a tool of manipulation, and a means of exerting pressure – including for government purposes. This is the view of the Edit Wars team, and not theirs alone. Authoritarian states exert direct and indirect influence on public opinion to secure their information monopoly. Their toolbox includes the expulsion of people critical of the government, the banning of independent media and the blocking of websites. Critical voices are silenced with laws that make any information not originating from an official source punishable as “false news” under criminal law.

What happens when there is such a state monopoly on information? What do citizens experience in the echo chamber of propaganda? To what extent can the Russian pro-government media distort the reality of the war between Russia and Ukraine? The Edit Wars project attempts to answer these questions in a data-driven and artistic way by showing how destructive propaganda narratives develop. Edit Wars is based on an analysis of headlines in Russian-language online media and covers the period from January 1 to June 30, 2022. More than two million articles were examined, and the topics and mentions relevant to the study’s subject were identified and extracted. The project results are presented as an interactive installation and as a website with a research presentation and interactive elements.

Edit Wars analysed 250,000 headlines in Russian online media between January and July 2022. The results also serve as the basis for artistic work that draws attention to the manipulation of public opinion in Russia.

BEST ARTISTS in the ARTISTS FOR MEDIA track (First cohort of the competition)

Soft Evidence

Soft Evidence by Ania Catherine and Dejha Ti is a series of slow visual scenes that never happened – films manipulated by machines trained to lie. Soft Evidence uses deepfakes as art – usually a highly political form, but here, purposely apolitical to provide a neutral ground for conversations about audiovisual manipulation. The emotions and questions the series evokes guide viewers in new directions, giving them tools and strategies to navigate this digital terrain.

Using artificial intelligence, computer vision, machine learning and deep learning, the artists have created synthetic media vignettes (deepfakes) that show no trace of audiovisual manipulation. Soft Evidence addresses two critical aspects of the deepfake problem. The first is the ability to depict someone doing something they have never done, being in a place they have never been, or saying something they have never said. The second is the introduction of plausible deniability, which allows people to evade responsibility for actions captured in photos or videos.

Soft Evidence contributes to the global discussion about the unequal privilege of inventing “truths”. The increasingly complicated field of “visual evidence” shows that populations on the margins of society, furthest removed from the financial, technological and political centres of power, are increasingly vulnerable.

Scenes from Soft Evidence that never happened. Synthetically created as deepfakes.

Contact

Alexandra Garatzogianni

L3S employee Alexandra Garatzogianni was the coordinator of MediaFutures during the project period from September 2020 to August 2023. Ten organisations from six European countries were involved in the project.