© Image generated by ChatGPT to illustrate single-cell foundation models

Foundation Models in Biomedicine

AI Driving Innovation in Medicine

From decoding DNA to supporting clinical decision-making, so-called foundation models are opening up entirely new horizons for biomedical research, diagnostics and therapy.

Well-known systems such as ChatGPT, Claude or DALL·E have already shown how powerful modern AI can be. These models are largely based on transformers, a type of neural network, and are trained on vast datasets: internet content, licensed material, partner contributions, curated sources such as books, code and scientific journals, and in some cases even artificially generated data.

Although pre-training requires enormous time and computational resources, the investment pays off. Once trained, the models can be adapted with relative ease – through fine-tuning or targeted prompts – to perform a wide variety of tasks: writing, debugging code, generating images or answering complex questions. Biomedical research is now harnessing this potential for its own purposes.

Breakthroughs in Medicine and Biology

In the life sciences, foundation models are learning to detect patterns across multiple levels – from clinical records and radiology scans to genes, proteins and even individual cells.

- Text-based models such as ClinicalBERT or BioBERT, trained on large collections of clinical notes, electronic health records and scientific articles, already achieve near-expert performance in diagnostics and prognosis.

- Medical imaging models like MedSAM, SAMed or UNI, trained on millions of radiology and pathology images, use techniques such as prompt-based segmentation and uncertainty estimation to improve accuracy in organ segmentation, tumour localisation and disease classification.

- Genomics models, including DNABERT, Nucleotide Transformer, and GeneMask (developed at L3S), help decipher the “grammar” of DNA, identifying regulatory regions and potentially disease-relevant mutations.

- Protein models such as AlphaFold, ProtGPT2 and ProteinBERT are trained on large collections of protein data. AlphaFold predicts protein structures with near-experimental accuracy for many proteins, while language models such as ProtGPT2 and ProteinBERT uncover evolutionary patterns and functional properties and even support the design of novel proteins – a crucial step for drug discovery and synthetic biology.
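The DNA “grammar” mentioned for the genomics models can be made concrete with a small example. DNABERT-style models typically do not read single letters but split a sequence into overlapping short fragments (k-mers) that play the role of words. The following Python sketch illustrates that idea only; the function name `kmer_tokenize`, the choice of k = 3 and the example sequence are assumptions made for illustration, not code from any of the models named above.

```python
def kmer_tokenize(sequence: str, k: int = 3) -> list[str]:
    """Split a DNA sequence into overlapping k-mers (step size 1).

    Illustrative helper (hypothetical name), not part of any library.
    """
    if k <= 0:
        raise ValueError("k must be a positive integer")
    sequence = sequence.upper()
    # A sequence of length n yields n - k + 1 overlapping k-mers.
    return [sequence[i:i + k] for i in range(len(sequence) - k + 1)]


# Made-up example sequence, chosen only to show the output format.
tokens = kmer_tokenize("ATGCGTA", k=3)
print(tokens)
```

Each resulting k-mer is then treated like a word in a sentence, which is what allows language-model techniques such as masking and predicting tokens to be applied to DNA.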

Research at L3S and CAIMED: AI at the Cellular Level

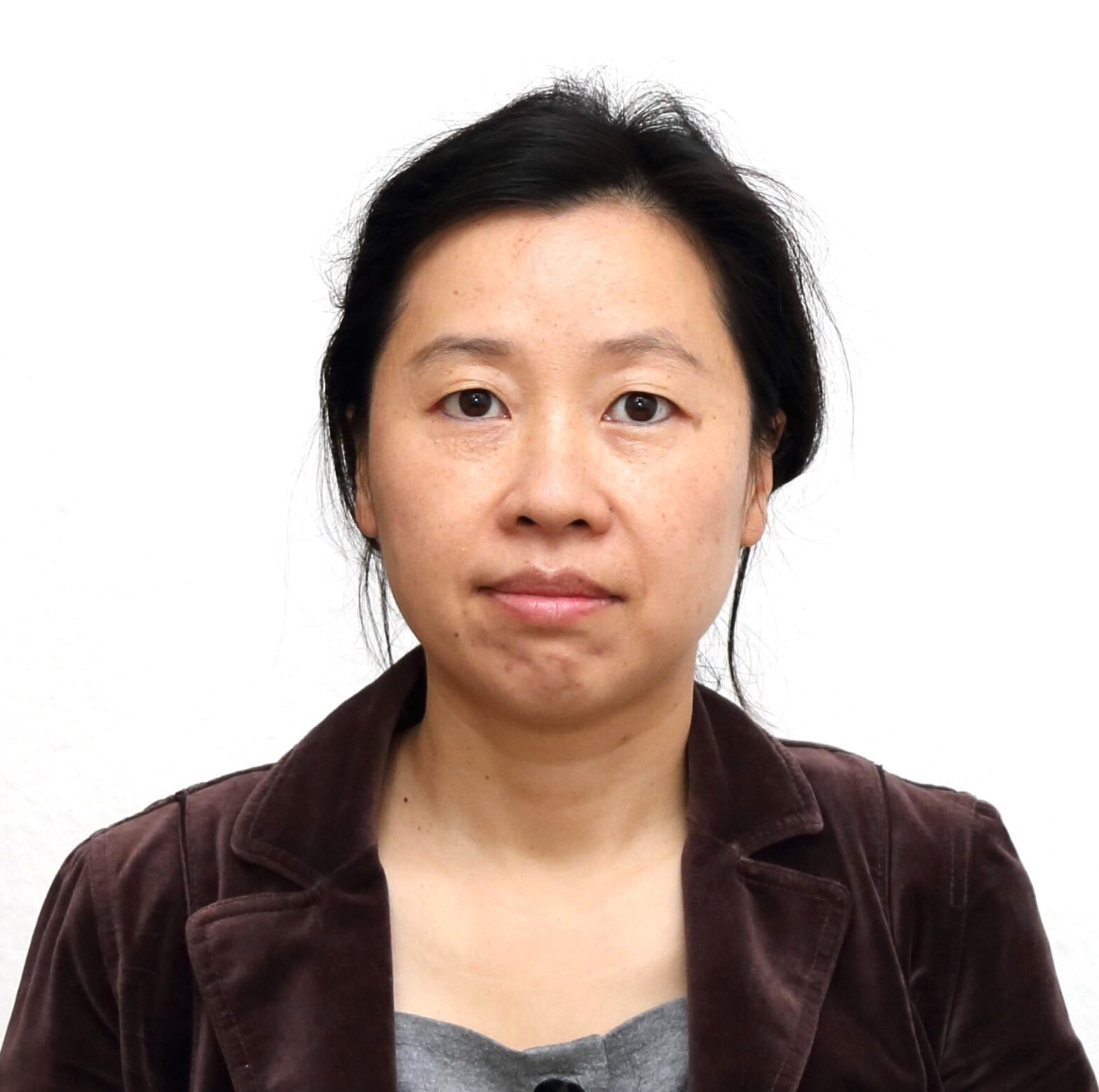

At the Lower Saxony Center for AI & Causal Methods in Medicine (CAIMED), Dr. Michelle Tang and her colleagues from the L3S Research Center are developing single-cell foundation models. These are trained on hundreds of millions of data points from single-cell analyses – measurements that reveal which genes are active in individual cells.

Such models can distinguish cell types, shed light on disease mechanisms and predict how cells respond to drugs. In systematic comparisons of leading models such as scGPT, scFoundation and Geneformer, scFoundation stood out for its strong performance in cell type recognition and knowledge transfer to new datasets.
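How cell type recognition can build on such a model may be easier to picture with a toy example. One common and simple approach, assumed here purely for illustration, is to let the model turn each cell into a numeric vector (an embedding) and then assign a new cell to the cell type whose average embedding is closest. The sketch below uses made-up two-dimensional vectors and hypothetical function names; it does not reproduce the evaluation method used in the comparison described above, nor the interface of scGPT, scFoundation or Geneformer.

```python
import math
from collections import defaultdict


def compute_centroids(embeddings, labels):
    """Average the embedding vectors of all reference cells per cell type."""
    sums = {}
    counts = defaultdict(int)
    for vector, label in zip(embeddings, labels):
        if label not in sums:
            sums[label] = [0.0] * len(vector)
        sums[label] = [s + v for s, v in zip(sums[label], vector)]
        counts[label] += 1
    return {
        label: [s / counts[label] for s in total]
        for label, total in sums.items()
    }


def predict_cell_type(centroids, vector):
    """Return the cell type whose centroid is closest (Euclidean distance)."""
    return min(centroids, key=lambda label: math.dist(centroids[label], vector))


# Made-up embeddings standing in for the output of a single-cell model.
reference_embeddings = [[0.9, 0.1], [1.0, 0.0], [0.1, 0.9], [0.0, 1.0]]
reference_labels = ["T cell", "T cell", "B cell", "B cell"]

centroids = compute_centroids(reference_embeddings, reference_labels)
prediction = predict_cell_type(centroids, [0.8, 0.2])
print(prediction)
```

In this picture, knowledge transfer to a new dataset means that cells from the new dataset are embedded by the same pre-trained model and compared against the reference cell types without retraining from scratch.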

“We now plan to develop our own models and translate them into concrete medical applications,” says Tang. “Collaboration within CAIMED is central to this effort.”

From the Lab to the Clinic

Biomedical foundation models are still in their infancy. Future developments aim to make them more energy-efficient, transparent and versatile. Particularly promising are new architectures capable of processing language, images and temporal data simultaneously. At the same time, issues of data protection, fairness and regulatory compliance must be addressed.

“If these systems can be deployed safely, explainably and responsibly, they will make the leap into clinical practice,” Tang explains. “That could transform medicine in a lasting way.”

Contact

Dr. Michelle Tang

Dr. Michelle Tang is a researcher at L3S. She specialises in the intersection of artificial intelligence and biomedicine, particularly biological foundation models for medical applications.